If your site has forms (a feedback form, an order form, a comment box, and so on), sooner or later you will run into spam. And the longer the site exists, the more messages you will receive from robots offering all kinds of nonsense.

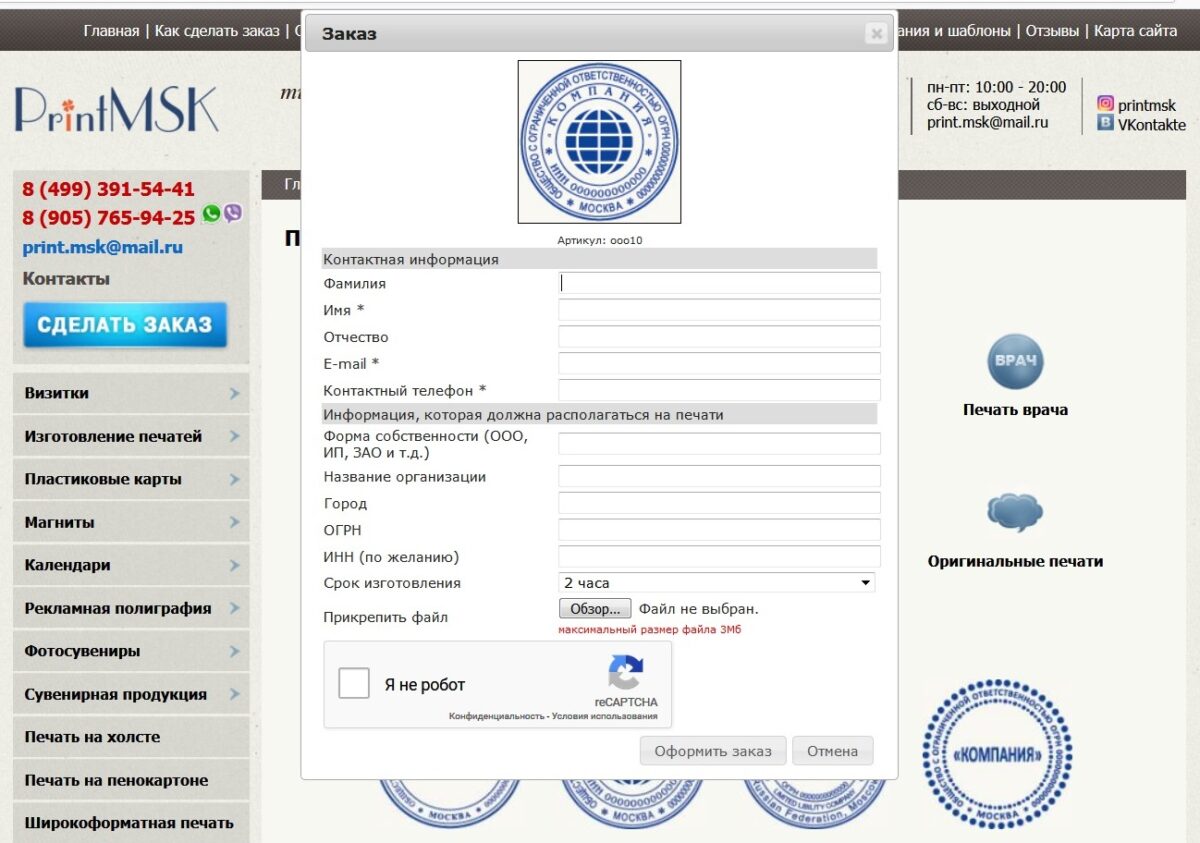

The site in this story has existed for a long time, and its owners were literally drowning in spam. It ranks at the top of the search results for many queries and, despite a very outdated design, brings its owners a good income. But with 100 or more spam messages arriving for every 10 real orders, they had grown tired of separating the wheat from the chaff every day. The site's SEO specialist asked me whether there was any way to reduce the flow of junk mail.

Google reCAPTCHA was already installed on the site, but it did not help at all. My first impulse was simply to update the captcha, but then I looked at the code… As I said, this site is not just old, it is very, very old. I was quietly horrified. No frameworks, no CMS, just a home-grown engine running on PHP 5.3. Half of the functions it uses are deprecated, the encoding is windows-1251, variables that belong in a separate config are scattered throughout the application, and the hosting has no SSH…

I swore. I did not want to touch anything at all on this miraculously still-working site. Realizing that a “simple update” of Google’s reCAPTCHA could easily break everything, I decided instead to use an old but time-tested way of turning the robots away.

The method is elementary. Bots, as a rule, tick every checkbox in a form in order to pass checks such as agreeing to the privacy policy. So we add a checkbox to each form and hide it with CSS. A human visitor does not see this checkbox and therefore never ticks it. But a robot… it will tick it for sure. The server then only has to reject any submission in which the hidden checkbox arrives ticked.
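A minimal sketch of this trap might look like the following. The field name `agree_terms`, the off-screen CSS, and the function name `honeypotTripped` are assumptions made for illustration, not the code used on the site. The real check would live in the site's PHP form handler; the logic is written in JavaScript here only to keep the examples in one language.

```javascript
// Hypothetical field name: something a bot would expect to have to tick.
const HONEYPOT_FIELD = "agree_terms";

// Markup for the trap, to be placed inside each form. The wrapper is moved
// off-screen with CSS, so a human visitor never sees the checkbox, and
// tabindex="-1" keeps it out of keyboard navigation.
const honeypotMarkup = `
<div style="position:absolute; left:-9999px;" aria-hidden="true">
  <label>
    <input type="checkbox" name="${HONEYPOT_FIELD}" value="1" tabindex="-1" autocomplete="off">
    I agree
  </label>
</div>`;

// Server-side logic: a browser sends a checkbox only when it is ticked,
// so the mere presence of the field marks the submission as suspicious.
// `fields` is assumed to be the already-parsed POST data as a plain object.
function honeypotTripped(fields) {
  return Object.prototype.hasOwnProperty.call(fields, HONEYPOT_FIELD);
}
```

A form handler would call `honeypotTripped` on the parsed POST fields and quietly discard the message whenever it returns `true`.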

To be safe, I decided not to limit myself to one trap and set a second one, just as simple and just as effective.

Every form has an action attribute: the address of the script to which all the data entered in the form is sent. An ordinary visitor does not need to know any of this. They simply click the “Submit” button and the data goes to the server. Bots, however, follow a completely different algorithm. They do not press any buttons. The bot's script analyzes the form's attributes and all of its available fields, builds a request from that data, and sends it directly to the server script responsible for processing the form.
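To make this behavior concrete, here is a rough simulation of how a simple bot might assemble its request body from the fields it finds in the markup. The field list, the field names, and the function `naiveBotPayload` are hypothetical, and real bots vary; nothing is sent over the network.

```javascript
// Hypothetical description of the fields a bot would find in the form markup.
const formFields = [
  { type: "text", name: "name", value: "" },
  { type: "checkbox", name: "agree_terms", value: "1" }, // a checkbox, visible or not
  { type: "hidden", name: "form_check", value: "0" },    // a hidden input with its default value
];

// Builds a URL-encoded body the way a simple bot might: it never runs the
// page's scripts or fires any events, it only reads the markup.
function naiveBotPayload(fields) {
  const params = new URLSearchParams();
  for (const field of fields) {
    if (field.type === "checkbox") {
      params.append(field.name, field.value || "on"); // ticks every checkbox
    } else if (field.type === "hidden") {
      params.append(field.name, field.value);         // copies hidden values untouched
    } else {
      params.append(field.name, "spam text");         // fills visible inputs with its own text
    }
  }
  return params.toString();
}

console.log(naiveBotPayload(formFields));
// → "name=spam+text&agree_terms=1&form_check=0"
```

The point of the simulation is that everything in the body comes from the static markup: checkboxes arrive ticked, and hidden fields arrive with exactly the value they had in the HTML.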

There are, of course, more advanced robots that imitate human actions, right down to mouse clicks in a real browser, but these are rare.

Knowing this, you can add another hidden field and use JavaScript to change its value when the “Submit” button is clicked. A human who submits the form by clicking the button sends one value to the server, while a bot that never clicks anything sends another. A simple check on the server then sweeps the robots away.
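The second trap could be sketched roughly as follows. The field name `form_check`, the two values, and the function names are assumptions for illustration only. The server-side part would really belong in the site's PHP handler and is shown in JavaScript just to keep the examples in one language.

```javascript
// Hypothetical names and values, chosen for illustration only.
const TOKEN_FIELD = "form_check";
const HUMAN_VALUE = "1"; // written by the script when the form is really submitted
// The HTML ships the field with a different default value:
//   <input type="hidden" name="form_check" value="0">

// Client side: overwrite the hidden field when the form's submit event fires.
function armForm(form) {
  form.addEventListener("submit", function () {
    const field = form.elements[TOKEN_FIELD];
    if (field) {
      field.value = HUMAN_VALUE;
    }
  });
}

// Server-side logic: anything other than the value set by the script means
// the request was built without going through the page's submit handler.
// `fields` is assumed to be the already-parsed POST data as a plain object.
function submittedByScript(fields) {
  return fields[TOKEN_FIELD] !== HUMAN_VALUE;
}

// In the browser, arm every form on the page once the DOM is ready.
if (typeof document !== "undefined") {
  document.addEventListener("DOMContentLoaded", function () {
    Array.prototype.forEach.call(document.forms, armForm);
  });
}
```

This sketch listens for the form's submit event rather than the button's click, so that a visitor who presses Enter is also treated as human; that is a choice made for the example. Note that a visitor with JavaScript disabled would be rejected by such a check.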

I honestly warned the client that the method works but, of course, does not give one hundred percent protection. If attackers want to spam a specific site, they will find a way. But the authors of most spam-mailing software aim for maximum coverage of sites. They will not dig into why one particular site rejects them. Their main task is to get around the standard types of protection, and Google reCAPTCHA is exactly that kind of standard protection. The client replied that he would be happy if the amount of spam were cut in half.

Almost a month later, I asked how things were going and heard good news. I quote: “there is no spam!” So sometimes the old-fashioned ways of protecting against bots work better than the vaunted captchas from a world-famous company.